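In the previous chapter, we covered the basic logic of scheduling and got a first look at the related features. Today we take a closer look at the ways to control Pod scheduling, in particular how to assign Pods to specific nodes to meet different application requirements and resource conditions. This helps us manage resources more precisely and keep applications stable and performant.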

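In most cases the Kubernetes scheduler places Pods sensibly on its own, but sometimes we need finer control over which node a Pod lands on. The methods below let us constrain a Pod to run on particular nodes, or to prefer certain nodes. For example, we can make sure a Pod ends up on a node equipped with an SSD, or place Pods that communicate heavily in the same availability zone to reduce latency and improve performance.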

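nodeSelector is the simplest node selection constraint. Add a nodeSelector field to the Pod spec and list the node labels the target node must have. Kubernetes only schedules the Pod onto nodes that carry all of those labels.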
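As a minimal sketch of this idea, the Pod below lists two labels under nodeSelector, so a node has to carry both of them to be eligible. The Pod name nginx-ssd is a placeholder chosen for illustration, and disktype=ssd is a custom label that is assumed to have been added to a node beforehand.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx-ssd              # hypothetical name, for illustration only
spec:
  containers:
  - name: nginx
    image: nginx
  nodeSelector:
    disktype: ssd              # custom label; assumed to exist on the target node
    kubernetes.io/os: linux    # well-known label that nodes normally carry
```

If no node carries both labels, the Pod stays Pending until a matching node appears.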

nodeSelector provides a basic way to select nodes, while affinity and anti-affinity extend it with more flexible constraints. These constraints can be based on node labels or on the labels of other Pods.

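Node affinity is conceptually an enhanced version of nodeSelector: it also selects nodes by node label, but offers finer-grained control. Besides its two selection strategies, it matches labels more flexibly than a plain equality comparison.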
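To illustrate what "more flexible than equality" can look like, here is a sketch that uses several of the operators node affinity accepts (In, NotIn, Exists, DoesNotExist, Gt, Lt). It uses the hard-requirement field described right after this example. The Pod name and the labels dedicated and cpu-count are hypothetical and only serve the illustration.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: affinity-operators       # hypothetical name
spec:
  affinity:
    nodeAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:       # multiple terms would be ORed
        - matchExpressions:      # expressions inside one term are ANDed
          - key: disktype
            operator: In         # label value must be one of the listed values
            values: ["ssd", "nvme"]
          - key: dedicated       # hypothetical label
            operator: DoesNotExist   # node must not carry this label at all
          - key: cpu-count       # hypothetical label holding an integer
            operator: Gt         # label value, parsed as an integer, must exceed 8
            values: ["8"]
  containers:
  - name: nginx
    image: nginx
```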

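It currently offers two selection strategies:

- requiredDuringSchedulingIgnoredDuringExecution: similar in concept to nodeSelector. It is a hard requirement; if no node satisfies it, the Pod stays in the Pending state.
- preferredDuringSchedulingIgnoredDuringExecution: the more flexible option. The scheduler tries to satisfy it, but if no node matches, the Pod can still be scheduled onto a node that does not.

Inter-Pod affinity and anti-affinity constrain where a new Pod is scheduled based on the labels of Pods that are already running on nodes. For example, if some Pods share an application label (such as app=frontend), affinity can keep them on the same node where possible to reduce network latency. Anti-affinity can instead spread the Pods of one application across different nodes, which improves availability and avoids a single point of failure. Both are evaluated per topology domain (such as region, availability zone, or node), which controls how the Pods are distributed.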
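The following sketch combines a soft node preference with a hard Pod anti-affinity rule. It assumes a hypothetical Deployment named frontend and a custom disktype=ssd node label; the weight value is an arbitrary choice within the allowed 1 to 100 range.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: frontend                 # hypothetical name
spec:
  replicas: 2
  selector:
    matchLabels:
      app: frontend
  template:
    metadata:
      labels:
        app: frontend
    spec:
      affinity:
        nodeAffinity:
          # Soft rule: SSD nodes score higher, other nodes remain eligible.
          preferredDuringSchedulingIgnoredDuringExecution:
          - weight: 50
            preference:
              matchExpressions:
              - key: disktype
                operator: In
                values:
                - ssd
        podAntiAffinity:
          # Hard rule: never share a node with another app=frontend Pod.
          requiredDuringSchedulingIgnoredDuringExecution:
          - labelSelector:
              matchLabels:
                app: frontend
            topologyKey: kubernetes.io/hostname
      containers:
      - name: nginx
        image: nginx
```

Because the anti-affinity rule is a hard requirement, any replica beyond the number of eligible nodes would stay Pending; switching it to the preferred form would relax that.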

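Pod affinity and anti-affinity rely on nodes carrying a label that matches topologyKey. If some or all nodes lack the specified topologyKey label, scheduling may not behave as expected.

Because affinity and anti-affinity both depend on labels, a natural question is whether changing labels at runtime affects Pods that are already running. IgnoredDuringExecution means exactly what it says: these rules ignore label changes during execution. Once a Pod has been assigned to a node, the scheduler no longer intervenes.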
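To make the role of topologyKey more concrete, here is a sketch of a Pod that must land in the same availability zone as an existing app=frontend Pod. The Pod name cache, the redis image, and the app=frontend label are assumptions for illustration, and the nodes are assumed to carry the topology.kubernetes.io/zone label.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: cache                    # hypothetical name
  labels:
    app: cache
spec:
  affinity:
    podAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
      - labelSelector:
          matchLabels:
            app: frontend        # co-locate with Pods carrying this label
        # Nodes sharing the same value for this label form one topology domain.
        topologyKey: topology.kubernetes.io/zone
  containers:
  - name: redis
    image: redis
```

With kubernetes.io/hostname as the topologyKey the rule would instead mean "same node" rather than "same zone".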

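nodeName is a more direct way to select a node and takes precedence over nodeSelector and affinity/anti-affinity. It suits cases where a Pod must run on one specific node, such as testing on a single node or handling special requirements, and it makes testing and troubleshooting easier because the Pod lands exactly where specified. When nodeName is set in the Pod spec, the scheduler ignores the Pod and the Pod is assigned directly to the named node. Note that if the named node does not exist or cannot provide the required resources, the Pod may fail to run.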

In Kubernetes, topology spread constraints control how Pods are distributed across topology domains (such as regions, availability zones, and nodes) to achieve higher availability and fault tolerance.
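As a rough sketch of what such a constraint can look like, the Pod below asks to be spread across availability zones in a best-effort manner. The name web and the label app=web are placeholders, and the nodes are assumed to carry the topology.kubernetes.io/zone label.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: web                      # hypothetical name
  labels:
    app: web
spec:
  topologySpreadConstraints:
  - maxSkew: 1                               # zones may differ by at most 1 matching Pod
    topologyKey: topology.kubernetes.io/zone # each distinct zone value is one domain
    whenUnsatisfiable: ScheduleAnyway        # soft: prefer balance, but still schedule
    labelSelector:
      matchLabels:
        app: web                             # only Pods with this label are counted
  containers:
  - name: nginx
    image: nginx
```

Using DoNotSchedule instead turns the constraint into a hard requirement.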

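The main fields of topologySpreadConstraints are:

- maxSkew: the maximum allowed difference in the number of matching Pods between topology domains.
- topologyKey: the node label key that defines a topology domain, for example topology.kubernetes.io/zone.
- whenUnsatisfiable: what to do when the constraint cannot be satisfied, either DoNotSchedule or ScheduleAnyway.
- labelSelector: selects the Pods that are counted when the distribution is computed.

When we deploy multiple Pods without any extra settings that influence kube-scheduler, the Pods are generally spread fairly evenly across the worker nodes. Let's verify this.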

    kubectl get node
    ---
    NAME                    STATUS   ROLES           AGE   VERSION
    wslkind-control-plane   Ready    control-plane   8d    v1.30.0
    wslkind-worker          Ready    <none>          8d    v1.30.0
    wslkind-worker2         Ready    <none>          8d    v1.30.0

The cluster has one control-plane node and two worker nodes.

Configuration file: deploy.yaml

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      creationTimestamp: null
      labels:
        app: test-deploy
      name: test-deploy
    spec:
      replicas: 6
      selector:
        matchLabels:
          app: test-pod
      template:
        metadata:
          creationTimestamp: null
          labels:
            app: test-pod
        spec:
          containers:
          - image: nginx
            name: nginx

This configuration file describes a minimal Deployment that creates 6 Pods.

    kubectl apply -f deploy.yaml
    kubectl get pod -l app=test-pod -o wide

Result:

    NAME                           READY   STATUS    RESTARTS   AGE   IP            NODE              NOMINATED NODE   READINESS GATES
    test-deploy-7f7cd9b788-2f4fj   1/1     Running   0          60s   10.244.2.26   wslkind-worker2   <none>           <none>
    test-deploy-7f7cd9b788-d6xvc   1/1     Running   0          60s   10.244.2.27   wslkind-worker2   <none>           <none>
    test-deploy-7f7cd9b788-jwsxr   1/1     Running   0          60s   10.244.2.25   wslkind-worker2   <none>           <none>
    test-deploy-7f7cd9b788-mfwg8   1/1     Running   0          60s   10.244.1.4    wslkind-worker    <none>           <none>
    test-deploy-7f7cd9b788-p228s   1/1     Running   0          60s   10.244.1.5    wslkind-worker    <none>           <none>
    test-deploy-7f7cd9b788-sqdm4   1/1     Running   0          4s    10.244.1.6    wslkind-worker    <none>           <none>

As shown, the Pods are indeed split evenly between the two worker nodes, three on each.

    kubectl delete -f deploy.yaml

In this chapter's hands-on exercises we select the wslkind-worker2 node by label, so the node needs to be labeled first.

    kubectl get node wslkind-worker2 --show-labels

Result:

    NAME              STATUS   ROLES    AGE   VERSION   LABELS
    wslkind-worker2   Ready    <none>   8d    v1.30.0   beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=wslkind-worker2,kubernetes.io/os=linux

Add the label and check again:

    kubectl label nodes wslkind-worker2 disktype=ssd
    kubectl get node wslkind-worker2 --show-labels

Result:

    NAME              STATUS   ROLES    AGE   VERSION   LABELS
    wslkind-worker2   Ready    <none>   8d    v1.30.0   beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,disktype=ssd,kubernetes.io/arch=amd64,kubernetes.io/hostname=wslkind-worker2,kubernetes.io/os=linux

The node now carries the disktype=ssd label.

You can also use the --label-columns (-L) flag to show specific labels as columns:

    kubectl get node wslkind-worker2 -L disktype

Result:

    NAME              STATUS   ROLES    AGE   VERSION   DISKTYPE
    wslkind-worker2   Ready    <none>   8d    v1.30.0   ssd

The DISKTYPE column confirms that the wslkind-worker2 node has the disktype=ssd label.

Configuration file: deploy.yaml

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      creationTimestamp: null
      labels:
        app: test-deploy
      name: test-deploy
    spec:
      replicas: 5
      selector:
        matchLabels:
          app: test-pod
      template:
        metadata:
          creationTimestamp: null
          labels:
            app: test-pod
        spec:
          containers:
          - image: nginx
            name: nginx
          nodeSelector:
            disktype: ssd

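This configuration file describes a Deployment whose Pod template has the node selector disktype: ssd. All Pods of this Deployment will therefore be scheduled onto nodes labeled disktype=ssd.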

    kubectl apply -f deploy.yaml

Verify that the Pods are running on the wslkind-worker2 node:

    kubectl get pod -l app=test-pod -o wide

Result:

    NAME                          READY   STATUS    RESTARTS   AGE    IP           NODE              NOMINATED NODE   READINESS GATES
    test-deploy-f465f68c4-dbs4z   1/1     Running   0          114s   10.244.2.6   wslkind-worker2   <none>           <none>
    test-deploy-f465f68c4-fsjkk   1/1     Running   0          114s   10.244.2.9   wslkind-worker2   <none>           <none>
    test-deploy-f465f68c4-vk28b   1/1     Running   0          114s   10.244.2.8   wslkind-worker2   <none>           <none>
    test-deploy-f465f68c4-wbzq8   1/1     Running   0          114s   10.244.2.7   wslkind-worker2   <none>           <none>
    test-deploy-f465f68c4-xgh74   1/1     Running   0          114s   10.244.2.5   wslkind-worker2   <none>           <none>

Configuration file: deploy.yaml

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      creationTimestamp: null
      labels:
        app: test-deploy
      name: test-deploy
    spec:
      replicas: 5
      selector:
        matchLabels:
          app: test-pod
      template:
        metadata:
          creationTimestamp: null
          labels:
            app: test-pod
        spec:
          nodeName: wslkind-worker2 # schedule the Pods onto this specific node
          containers:
          - image: nginx
            name: nginx

    kubectl apply -f deploy.yaml

Verify that the Pods are running on the wslkind-worker2 node:

    kubectl get pod -l app=test-pod -o wide

Result:

    NAME                           READY   STATUS    RESTARTS   AGE   IP            NODE              NOMINATED NODE   READINESS GATES
    test-deploy-6d4c4bcf7f-4z92c   1/1     Running   0          10s   10.244.2.18   wslkind-worker2   <none>           <none>
    test-deploy-6d4c4bcf7f-fhc5d   1/1     Running   0          10s   10.244.2.17   wslkind-worker2   <none>           <none>
    test-deploy-6d4c4bcf7f-k4vz6   1/1     Running   0          10s   10.244.2.19   wslkind-worker2   <none>           <none>
    test-deploy-6d4c4bcf7f-rbdz9   1/1     Running   0          10s   10.244.2.16   wslkind-worker2   <none>           <none>
    test-deploy-6d4c4bcf7f-sxtht   1/1     Running   0          10s   10.244.2.15   wslkind-worker2   <none>           <none>

Configuration file: deploy.yaml

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      creationTimestamp: null
      labels:
        app: test-deploy
      name: test-deploy
    spec:
      replicas: 5
      selector:
        matchLabels:
          app: test-pod
      template:
        metadata:
          creationTimestamp: null
          labels:
            app: test-pod
        spec:
          affinity:
            nodeAffinity:
              requiredDuringSchedulingIgnoredDuringExecution:
                nodeSelectorTerms:
                - matchExpressions:
                  - key: disktype
                    operator: In
                    values:
                    - ssd
          containers:
          - image: nginx
            name: nginx

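This configuration file describes a Deployment whose Pods have a node affinity rule, requiredDuringSchedulingIgnoredDuringExecution, that matches disktype=ssd. This means the Pods are only scheduled onto nodes that have the disktype=ssd label.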

    kubectl apply -f deploy.yaml

Verify that the Pods are running on the wslkind-worker2 node:

    kubectl get pod -l app=test-pod -o wide

Result:

    NAME                           READY   STATUS              RESTARTS   AGE   IP            NODE              NOMINATED NODE   READINESS GATES
    test-deploy-78768c794b-gz4rc   1/1     Running             0          8s    10.244.2.23   wslkind-worker2   <none>           <none>
    test-deploy-78768c794b-hpxpk   0/1     ContainerCreating   0          8s    <none>        wslkind-worker2   <none>           <none>
    test-deploy-78768c794b-jt8rd   1/1     Running             0          8s    10.244.2.20   wslkind-worker2   <none>           <none>
    test-deploy-78768c794b-pkh4r   1/1     Running             0          8s    10.244.2.21   wslkind-worker2   <none>           <none>
    test-deploy-78768c794b-qxzrs   1/1     Running             0          8s    10.244.2.22   wslkind-worker2   <none>           <none>

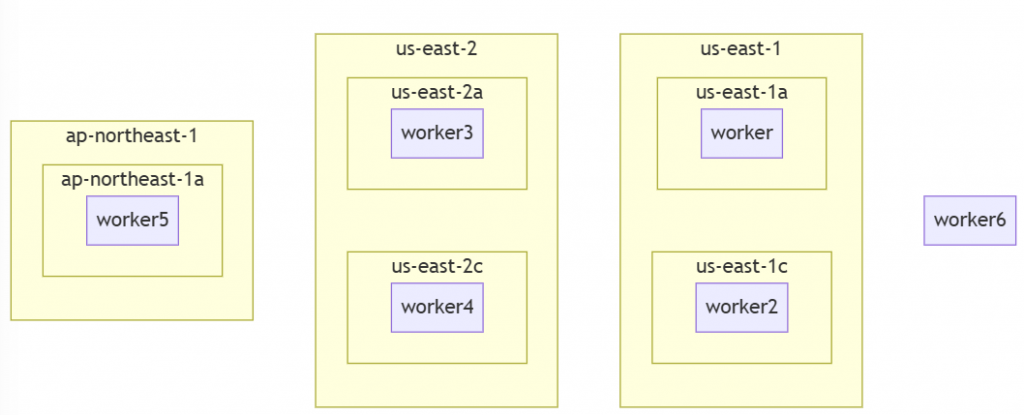

為了更好的呈現拓撲分佈的特性,我們建立新的叢集,來模仿實際生產環境。

示意圖如下

Configuration file: multi-region-cluster.yaml

    kind: Cluster
    apiVersion: kind.x-k8s.io/v1alpha4
    nodes:
    - role: control-plane
    - role: worker
      labels:
        topology.kubernetes.io/region: us-east-1
        topology.kubernetes.io/zone: us-east-1a
    - role: worker
      labels:
        topology.kubernetes.io/region: us-east-1
        topology.kubernetes.io/zone: us-east-1c
    - role: worker
      labels:
        topology.kubernetes.io/region: us-east-2
        topology.kubernetes.io/zone: us-east-2a
    - role: worker
      labels:
        topology.kubernetes.io/region: us-east-2
        topology.kubernetes.io/zone: us-east-2c
    - role: worker
      labels:
        topology.kubernetes.io/region: ap-northeast-1
        topology.kubernetes.io/zone: ap-northeast-1a
    - role: worker

This is a KinD configuration file. It contains 5 worker nodes that are assigned to 3 Regions through labels, plus 1 worker node that has no Region or Zone defined.

    kind create cluster --name multi-region-cluster --config multi-region-cluster.yaml

When we create a new cluster with KinD, it automatically generates the matching context, merges it into our kubeconfig, and switches to the newly created context.

    kind get clusters
    ---
    multi-region-cluster
    wslkind

    kubectl get no -L topology.kubernetes.io/region,topology.kubernetes.io/zone

The output is similar to:

    NAME                                 STATUS   ROLES           AGE   VERSION   REGION           ZONE
    multi-region-cluster-control-plane   Ready    control-plane   42s   v1.30.0
    multi-region-cluster-worker          Ready    <none>          20s   v1.30.0   us-east-1        us-east-1a
    multi-region-cluster-worker2         Ready    <none>          21s   v1.30.0   us-east-1        us-east-1c
    multi-region-cluster-worker3         Ready    <none>          20s   v1.30.0   us-east-2        us-east-2a
    multi-region-cluster-worker4         Ready    <none>          20s   v1.30.0   us-east-2        us-east-2c
    multi-region-cluster-worker5         Ready    <none>          21s   v1.30.0   ap-northeast-1   ap-northeast-1a
    multi-region-cluster-worker6         Ready    <none>          20s   v1.30.0

Imagine that our cluster has nodes around the world, spread across multiple Regions and Zones. We now want to deploy our latest web application evenly across the Regions.

Configuration file: deploy.yaml

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      creationTimestamp: null
      labels:
        app: test-deploy
      name: test-deploy
    spec:
      replicas: 12
      selector:
        matchLabels:
          app: test-pod
      template:
        metadata:
          creationTimestamp: null
          labels:
            app: test-pod
        spec:
          topologySpreadConstraints:
          - maxSkew: 1
            topologyKey: "topology.kubernetes.io/region"
            whenUnsatisfiable: DoNotSchedule
            labelSelector:
              matchLabels:
                app: test-pod
          containers:
          - image: nginx
            name: nginx

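The configuration above describes a Deployment. Through the topologySpreadConstraints field we specify that:

- Pods are spread based on the topology.kubernetes.io/region label.
- The number of Pods in any two Regions may differ by at most 1.
- With DoNotSchedule, a Pod is left unscheduled rather than violating the constraint, and nodes without the label receive no Pods.

Create the Deployment: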

    kubectl apply -f deploy.yaml
    kubectl get pod -l app=test-pod -o wide --sort-by=.spec.nodeName

The --sort-by=.spec.nodeName flag sorts the output by the nodeName field, which makes it easier to read.

Result:

    NAME                           READY   STATUS    RESTARTS   AGE    IP           NODE                           NOMINATED NODE   READINESS GATES
    test-deploy-567755cd66-57ld7   1/1     Running   0          115s   10.244.4.3   multi-region-cluster-worker    <none>           <none>
    test-deploy-567755cd66-vs75b   1/1     Running   0          115s   10.244.4.2   multi-region-cluster-worker    <none>           <none>
    test-deploy-567755cd66-5gffm   1/1     Running   0          115s   10.244.1.3   multi-region-cluster-worker2   <none>           <none>
    test-deploy-567755cd66-wkzfq   1/1     Running   0          115s   10.244.1.2   multi-region-cluster-worker2   <none>           <none>
    test-deploy-567755cd66-5rmnm   1/1     Running   0          115s   10.244.5.3   multi-region-cluster-worker3   <none>           <none>
    test-deploy-567755cd66-r5qtc   1/1     Running   0          115s   10.244.5.2   multi-region-cluster-worker3   <none>           <none>
    test-deploy-567755cd66-dmmvw   1/1     Running   0          115s   10.244.3.2   multi-region-cluster-worker4   <none>           <none>
    test-deploy-567755cd66-z8mt2   1/1     Running   0          115s   10.244.3.3   multi-region-cluster-worker4   <none>           <none>
    test-deploy-567755cd66-25ndq   1/1     Running   0          115s   10.244.2.2   multi-region-cluster-worker5   <none>           <none>
    test-deploy-567755cd66-5dw7m   1/1     Running   0          115s   10.244.2.5   multi-region-cluster-worker5   <none>           <none>
    test-deploy-567755cd66-6ml56   1/1     Running   0          115s   10.244.2.4   multi-region-cluster-worker5   <none>           <none>
    test-deploy-567755cd66-9dfdb   1/1     Running   0          115s   10.244.2.3   multi-region-cluster-worker5   <none>           <none>

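As shown, the Pods are spread evenly across the 3 Regions, with 4 of the listed Pods in each, and because the multi-region-cluster-worker6 node has no Region label, it was not selected for scheduling.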

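Next, we combine this with the node affinity covered earlier to get a more flexible setup.

Continuing from hands-on scenario 1, the node in the us-east-2c Zone has now failed, so we need to exclude that Zone.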

Configuration file: deploy.yaml

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      creationTimestamp: null
      labels:
        app: test-deploy
      name: test-deploy
    spec:
      replicas: 20
      selector:
        matchLabels:
          app: test-pod
      template:
        metadata:
          creationTimestamp: null
          labels:
            app: test-pod
        spec:
          topologySpreadConstraints:
          - maxSkew: 1
            topologyKey: "topology.kubernetes.io/region"
            whenUnsatisfiable: DoNotSchedule
            labelSelector:
              matchLabels:
                app: test-pod
          affinity:
            nodeAffinity:
              requiredDuringSchedulingIgnoredDuringExecution:
                nodeSelectorTerms:
                - matchExpressions:
                  - key: "topology.kubernetes.io/zone"
                    operator: NotIn
                    values:
                    - us-east-2c
          containers:
          - image: nginx
            name: nginx

    kubectl apply -f deploy.yaml
    kubectl get pod -l app=test-pod -o wide --sort-by=.spec.nodeName

Result:

    NAME                           READY   STATUS    RESTARTS   AGE   IP            NODE                           NOMINATED NODE   READINESS GATES
    test-deploy-84c6b8c46b-ngczt   1/1     Running   0          26s   10.244.4.6    multi-region-cluster-worker    <none>           <none>
    test-deploy-84c6b8c46b-gzws7   1/1     Running   0          26s   10.244.4.7    multi-region-cluster-worker    <none>           <none>
    test-deploy-84c6b8c46b-phlnx   1/1     Running   0          29s   10.244.4.5    multi-region-cluster-worker    <none>           <none>
    test-deploy-84c6b8c46b-mw6hv   1/1     Running   0          31s   10.244.1.6    multi-region-cluster-worker2   <none>           <none>
    test-deploy-84c6b8c46b-9tvj2   1/1     Running   0          25s   10.244.1.7    multi-region-cluster-worker2   <none>           <none>
    test-deploy-84c6b8c46b-ff5t5   1/1     Running   0          32s   10.244.1.5    multi-region-cluster-worker2   <none>           <none>
    test-deploy-84c6b8c46b-6smxr   1/1     Running   0          32s   10.244.5.6    multi-region-cluster-worker3   <none>           <none>
    test-deploy-84c6b8c46b-75sgl   1/1     Running   0          32s   10.244.5.7    multi-region-cluster-worker3   <none>           <none>
    test-deploy-84c6b8c46b-78hcg   1/1     Running   0          26s   10.244.5.10   multi-region-cluster-worker3   <none>           <none>
    test-deploy-84c6b8c46b-dhb68   1/1     Running   0          32s   10.244.5.8    multi-region-cluster-worker3   <none>           <none>
    test-deploy-84c6b8c46b-xwqkc   1/1     Running   0          32s   10.244.5.5    multi-region-cluster-worker3   <none>           <none>
    test-deploy-84c6b8c46b-clkgv   1/1     Running   0          27s   10.244.5.9    multi-region-cluster-worker3   <none>           <none>
    test-deploy-84c6b8c46b-l58st   1/1     Running   0          32s   10.244.5.4    multi-region-cluster-worker3   <none>           <none>
    test-deploy-84c6b8c46b-tjhmq   1/1     Running   0          32s   10.244.2.7    multi-region-cluster-worker5   <none>           <none>
    test-deploy-84c6b8c46b-mlrhp   1/1     Running   0          28s   10.244.2.10   multi-region-cluster-worker5   <none>           <none>
    test-deploy-84c6b8c46b-5whtr   1/1     Running   0          27s   10.244.2.11   multi-region-cluster-worker5   <none>           <none>
    test-deploy-84c6b8c46b-jffbj   1/1     Running   0          31s   10.244.2.8    multi-region-cluster-worker5   <none>           <none>
    test-deploy-84c6b8c46b-6dskd   1/1     Running   0          32s   10.244.2.6    multi-region-cluster-worker5   <none>           <none>
    test-deploy-84c6b8c46b-tshgm   1/1     Running   0          29s   10.244.2.9    multi-region-cluster-worker5   <none>           <none>
    test-deploy-84c6b8c46b-79tkt   1/1     Running   0          26s   10.244.2.12   multi-region-cluster-worker5   <none>           <none>

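As shown, the multi-region-cluster-worker4 node, which sits in the us-east-2c Zone, is excluded this time, and the Pods are still spread evenly across the 3 Regions (6, 7, and 7 Pods) using the remaining eligible nodes.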

Remember to switch back to the original context for the upcoming exercises:

    kubectl config use-context kind-wslkind